AI Hallucinates – But So Do You!

AI hallucinates constantly, but so do humans. So why are we so alarmed when a machine mimics what the human brain has been doing for ages?

Read time: 4 minutes

In many conversations about artificial intelligence (AI), the term “hallucination” pops up sooner or later. People say AI hallucinates when it generates responses that sound plausible but are factually incorrect. But what does that actually mean? And more importantly: why does it bother us so much, when we humans constantly make guesses based on patterns too?

What does it mean when AI “hallucinates”?

A model like ChatGPT is trained on massive amounts of text data. It doesn’t learn facts like an encyclopedia — it learns patterns: when someone says this, that usually follows. When AI generates an answer, it’s basically making a very smart guess: based on everything it has seen, what is the most likely next word?

That’s not a bug — it’s how the system is designed to work. But it means that sometimes AI makes up things that sound right but aren’t. That’s what we call a hallucination.
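To make the "most likely next word" idea concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or any real language model is built: the five-sentence corpus, the `train_bigrams` and `complete` helpers, and the simple word-pair counting are all assumptions chosen for illustration. It only shows how a system that has learned patterns, and nothing else, can produce a fluent sentence that is false.

```python
from collections import Counter, defaultdict

# Tiny made-up training corpus (illustrative assumption, not real training data).
CORPUS = [
    "paris is the capital of france",
    "paris is the largest city of france",
    "rome is the capital of italy",
    "berlin is the capital of germany",
    "sydney is the largest city of australia",
]


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def complete(prompt, following, max_words=10):
    """Extend the prompt by always picking the most frequent next word."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = following.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


model = train_bigrams(CORPUS)
print(complete("sydney is the", model))
# prints: sydney is the capital of france
```

The toy model has never seen a false sentence, yet it produces one. After "the", the word "capital" is simply the most common continuation, and after "of", "france" is. Every step is a reasonable guess, but the result is wrong.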

But hold on… don’t humans do the same?

Actually, yes. We humans also rely on predictive knowledge. We constantly make assumptions based on experience, intuition, and incomplete information. Smell coffee in the morning? You assume your housemate is up. Hear drops on the window? You assume it’s raining. Knock over a glass? You expect the table to be wet. Even in conversations, we often answer without knowing the full truth — based on guesses and gut feeling.

In fact, our brain connects what we perceive to what we’ve experienced before. This is called predictive processing. What we see isn’t just raw data — it’s shaped by expectation and memory. Our minds automatically fill in the blanks. Think of recognizing a familiar face from far away, or reading a word with missing letters — our brains do the rest.

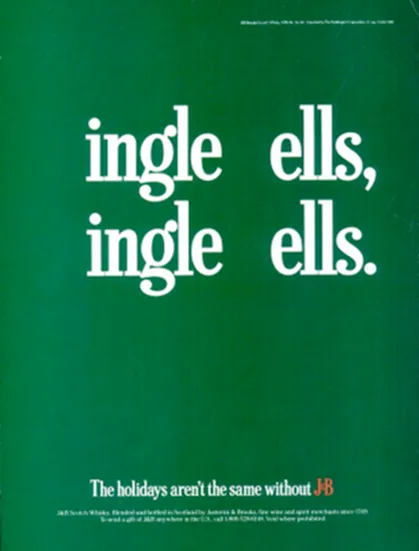

In other words: we were hallucinating long before AI came around. Just look at the example below — how would you fill this in?

Source: 1989 J&B Scotch Whiskey

So why are we afraid of AI?

We constantly fill in the gaps in what we perceive — consciously or not. So why does it feel unsettling when AI does the same?

- Scale and speed

A human might hallucinate once in a conversation. AI can run millions of conversations at once, so the same kind of mistake can be repeated across all of them. That makes the impact much bigger.

- Lack of awareness

When people are unsure, you can often hear it: “I think…” or “I’m not sure…” AI, by contrast, tends to sound just as confident when it is wrong as when it is right. That illusion of certainty makes errors harder to detect.

- Loss of control

Most people don’t fully understand how AI works — and what we don’t understand tends to scare us. We fear decisions being made by invisible logic, unnoticed by anyone. In areas like justice, healthcare, or finance, that’s a real concern.

What can we learn from this?

The lesson isn’t that AI is dangerous because it hallucinates. The danger lies in blindly trusting systems that don’t understand truth, context, or consequences.

But the comparison with people gives us hope. We have learned to think critically, spot mistakes, and correct each other, and we can apply the same habits to AI. With proper training, clear guidelines, and human oversight, AI doesn’t have to be perfect, just trustworthy enough.

Conclusion

Don’t see AI as an all-knowing being, but as a smart assistant who thinks fast and creatively — and sometimes gets things wrong. Be critical. Ask questions. Use your own judgment.

Because whether it’s human or machine: what we perceive is part reality, part interpretation. Being aware of that is the key to using AI responsibly.
