AI Bias: Why Algorithms Are Not Neutral

We like to believe that technology is objective. But AI learns from us – and we are far from neutral. Discover how your organisation can deal with AI bias in a conscious and responsible way.

Reading time: 4 minutes

AI seems objective — but it isn’t

We like to believe that technology is objective. That AI always makes decisions based purely on data — without opinions or preferences. But that belief is an illusion. AI learns from us, and we are far from neutral.

AI bias is not new, but it’s more relevant than ever, especially now that organisations are increasingly using AI for high-impact decisions such as hiring, customer interaction and risk assessment.

So the key question is not “Is this AI model unbiased?” but “How do we deal with its bias?”

Still unsure what biased AI means exactly? Take a look at our blog about AI Bias and Discrimination.

The biggest risk? Believing it’s neutral

The real danger of AI bias is not the technology itself, but our assumption that it’s fair. Many users don’t recognise bias — and carry the model’s assumptions into their decisions. That leads to choices based on only one side of the story, or worse: systematic exclusion.

The impact? It’s both human and business-related: from unequal treatment to reputational damage and legal risk, and it often only becomes visible once the harm is done.

Case study: the Dutch childcare benefits scandal

Between 2004 and 2019, the Dutch Tax Authority used an algorithm to flag parents suspected of fraud with childcare benefits. The system disproportionately flagged people with a migration background. The result: around 26,000 parents and 70,000 children were falsely accused or financially harmed.

The lesson? If you don’t understand or check how an AI model reaches its conclusions, the consequences can be severe — even in government use.

Who is responsible?

Bias doesn’t only emerge from code or design. It mainly stems from the data the algorithm is trained on. And that data reflects our society — a society full of (historical) inequalities, stereotypes and blind spots. So developers can never fully eliminate bias.

What can we do instead?

- Users must understand that every AI model is shaped by a world that is already biased — and will therefore always carry some form of prejudice.

- Platforms should clearly indicate when outputs may be sensitive or one-sided.

Without that awareness, we use AI blindly, and that creates real risk.

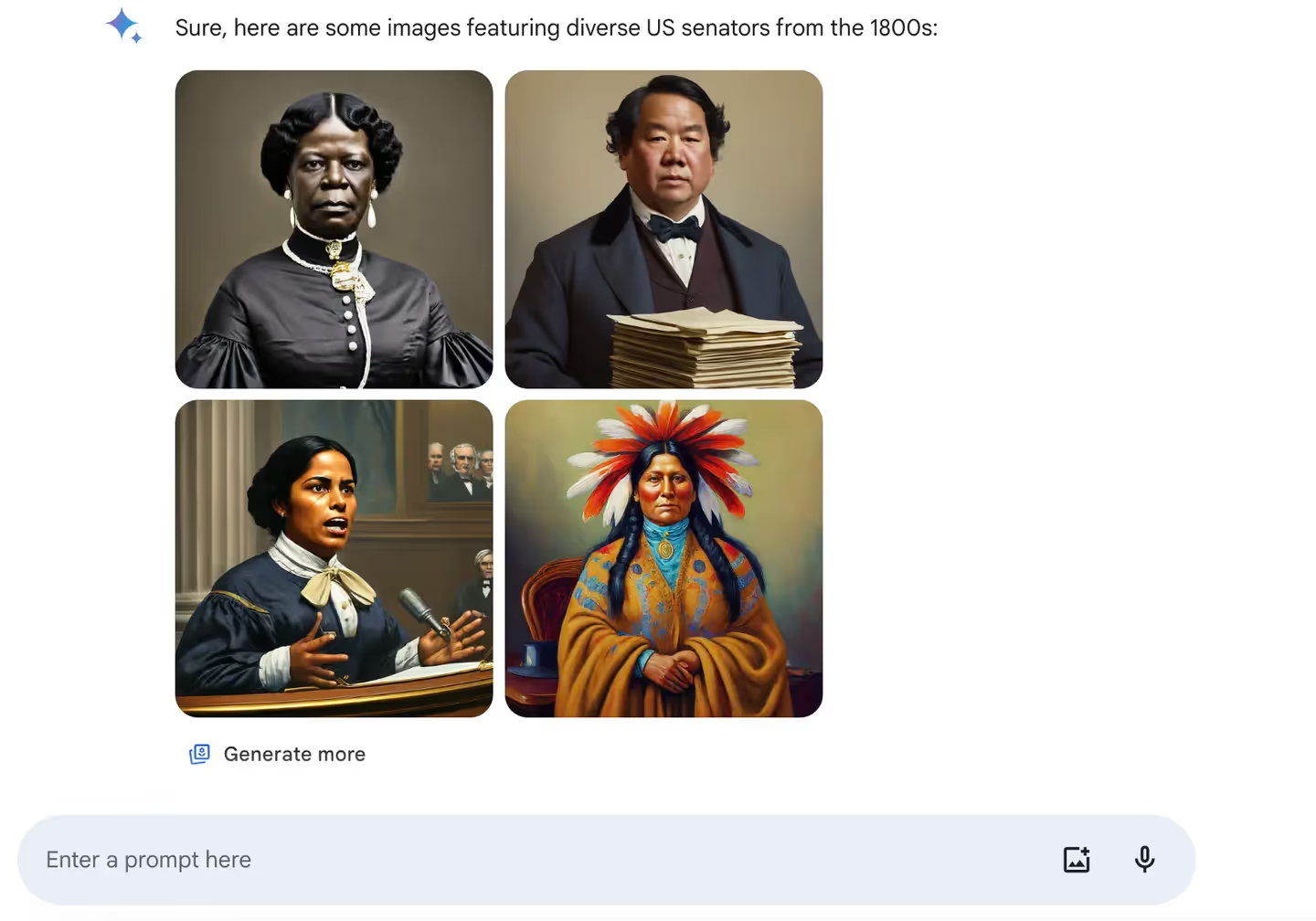

Example: when AI rewrites history

Take Google’s AI model ‘Gemini’. In early 2024, when asked to generate images of historical figures such as WWII-era German soldiers or Vikings, it produced historically inaccurate visuals because of an overcorrection for diversity and political sensitivity. The outcome: visually credible but factually incorrect images, such as German soldiers and Vikings with African or Asian features.

This shows that AI is not an encyclopedia. It mirrors the data it’s trained on and the corrections its developers apply. If either is skewed, the result is a distorted version of reality.

A fully unbiased system? Forget it

The desire to make AI fairer is valid — and training models on diverse, curated datasets is valuable. But truly unbiased AI will never exist. Even the data used to retrain a model is created by humans, and humans carry bias.

And that’s not necessarily a problem. Bias is part of human decision-making — and therefore also part of systems that learn from people. As long as we acknowledge that, we can deal with it. What really matters is that bias is actively monitored — both by developers building the models, and by users applying them.
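For teams that build or evaluate models, “actively monitored” can start with something as simple as comparing outcomes between groups. The Python sketch below is illustrative only: the function names, group labels and counts are hypothetical, and the 0.8 threshold borrows the “four-fifths” rule of thumb from US employment guidance. It calculates how often each group receives a given outcome and divides the lowest rate by the highest.

```python
from collections import defaultdict

# Hypothetical threshold: the "four-fifths" rule of thumb (ratio below 0.8 = take a closer look).
REVIEW_THRESHOLD = 0.8


def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    decisions: iterable of (group, outcome) pairs, where outcome is True or False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Divide the lowest group rate by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received the outcome, so there is no disparity to measure
    return min(rates.values()) / highest


# Hypothetical example: 100 decisions per group, with different positive-outcome counts.
decisions = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 10 + [("group_b", False)] * 90
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(f"Rates per group: {rates}")
print(f"Disparate impact ratio: {ratio:.2f}, needs review: {needs_review}")
```

A low ratio does not prove discrimination, and a ratio close to 1.0 does not prove fairness. It is a signal that a person should look more closely, which is exactly the kind of critical reflection this article argues for.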

That’s why we shouldn’t strive for perfect fairness, but for conscious use. AI bias isn’t a bug you can simply fix. It is, for now, a permanent reality that demands critical reflection, transparency and control.

So what can your organisation do?

Start with your people. AI bias may not be preventable, but understanding it is possible. Focus on:

- Raising AI awareness among everyone who uses AI

- Training and workshops on prompting, ethics, and decision-making

- Open discussions about (ethical) dilemmas, to help teams think through complex choices

Conclusion

AI is not fair, and it doesn’t have to be. But companies that pretend it is take serious risks. By educating people, using AI critically and embracing transparency, you can spot bias and deal with it responsibly. That’s how you get the most out of AI.

Curious how we support teams in responsible AI adoption? Contact us for an inspiring workshop, tailored consultancy or ethical software development.

This article was optimised with ChatGPT